In part one of this two-part report on data security, we found that software vulnerabilities are the most common cause of data breaches in Australia. In this article, we look at how IT professionals are investing in AI to strengthen cyber defences and limit the spread of data breaches. Above all, IT professionals in Australia are optimistic that AI can enhance cyber defences and improve protection against cyberattacks.

In this article

- Most Aussie IT professionals believe AI will help enhance defences, not attacks

- IT security spending in Australia is higher than the global average and nearly all IT professionals are investing in AI

- 96% of Aussie IT professionals are using AI-assisted cybersecurity tools

- Plan, prioritise, and prepare for successful AI implementation in cybersecurity

Artificial intelligence in cybersecurity can be both an emerging threat and an opportunity. Nevertheless, IT professionals' overall sentiment about using AI in cybersecurity is positive rather than negative, with respondents highlighting benefits such as advanced malware detection and real-time monitoring. These findings come from GetApp’s 2024 Data Security Survey, which gathered responses from 4,000 IT professionals in 11 countries, including 350 in Australia.*

An increasing number of firms are considering using AI in cybersecurity, and several signs suggest they are ready to adopt these systems, including plans to invest substantially in them over the coming months. Our research shows that professionals see clear value in using AI to secure their networks, cloud resources, and data. However, IT leaders should plan this adoption carefully: defining the use cases, preparing the data the system will use, and putting sufficient human guardrails in place.

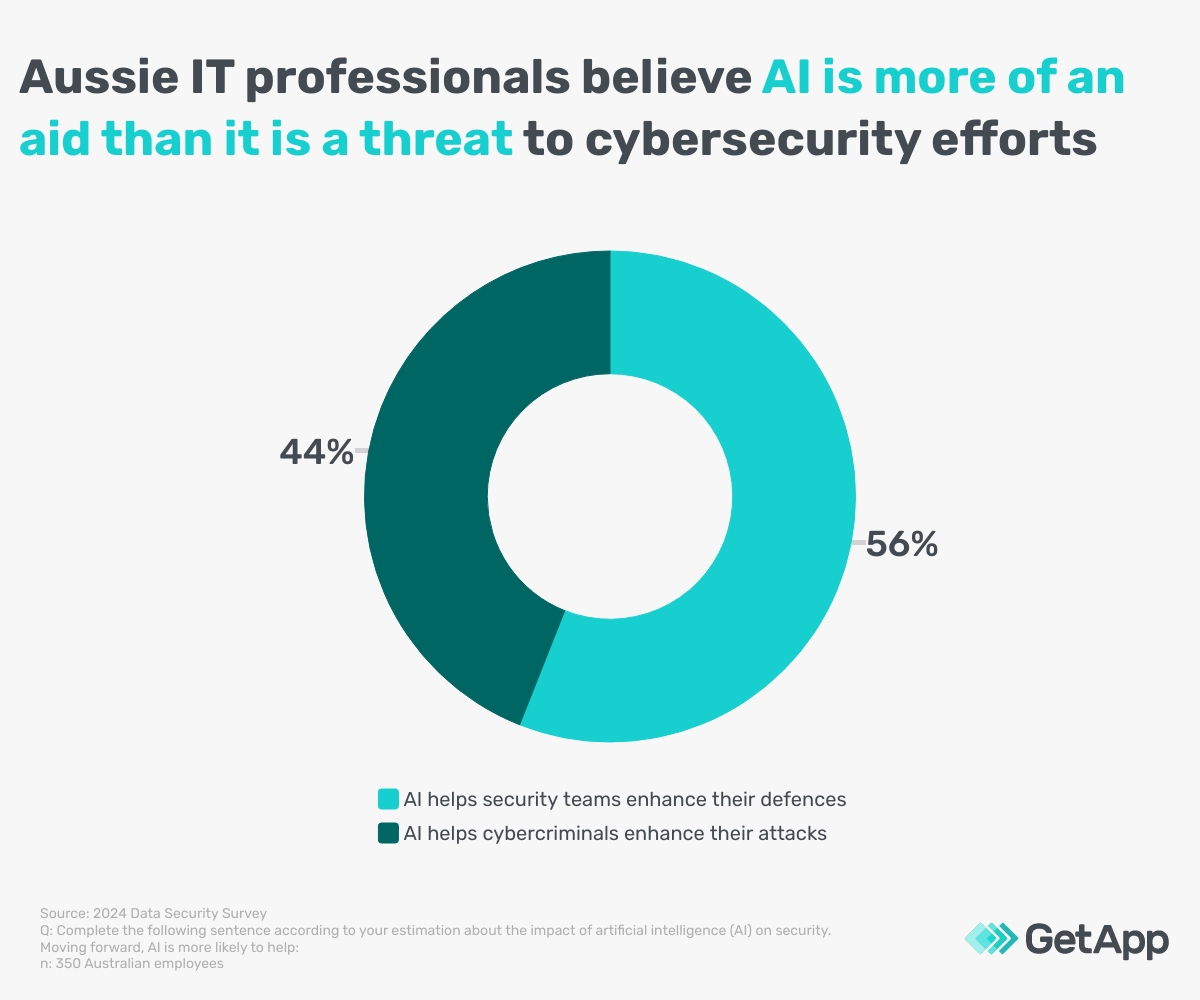

- 56% of Australian IT and data security professionals see AI as a greater ally than a threat.

- 91% of Aussie respondents expect spending on cybersecurity to increase in 2025.

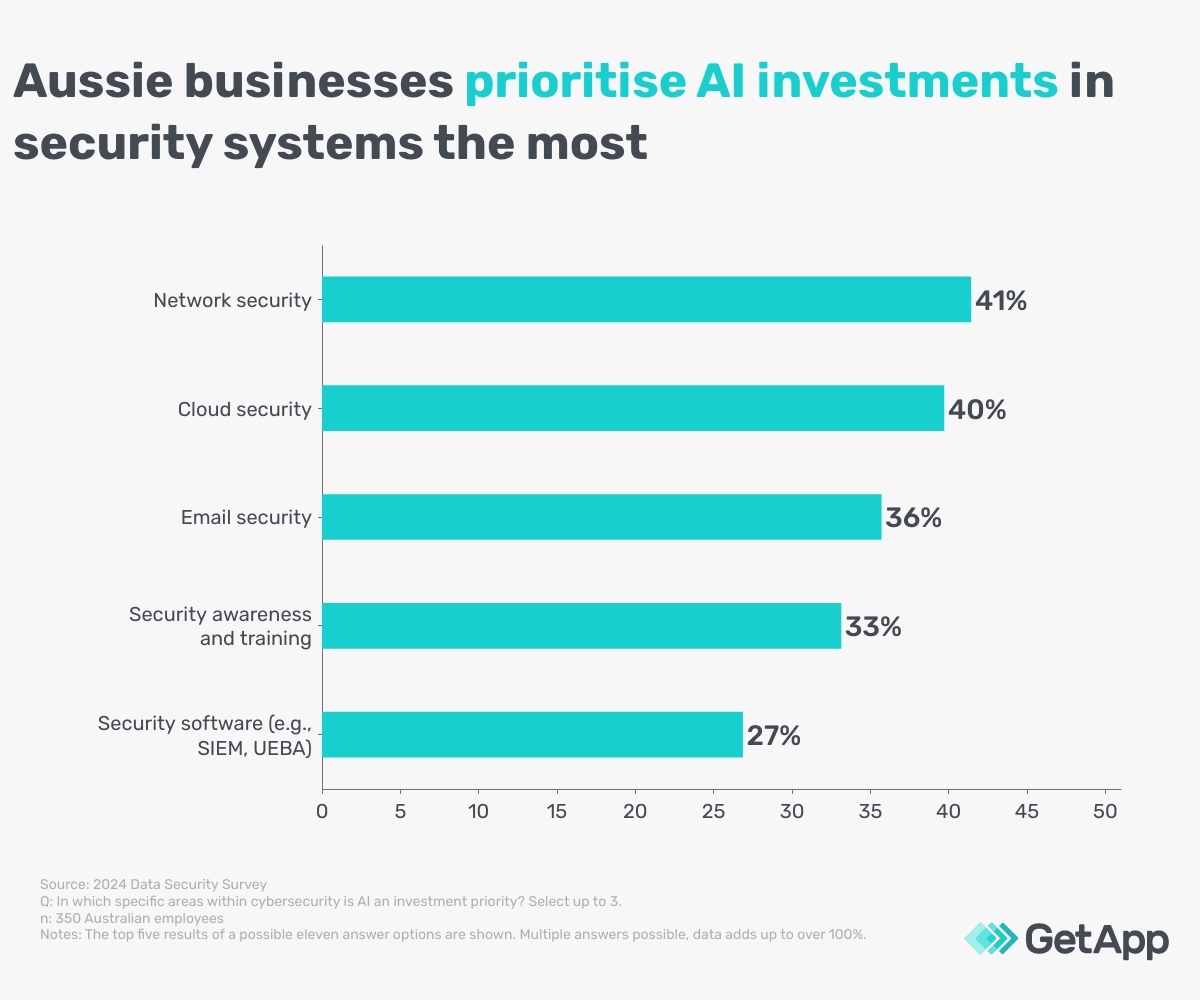

- AI solutions for network security (41%) and cloud security (40%) are considered the biggest investment priorities in Australia.

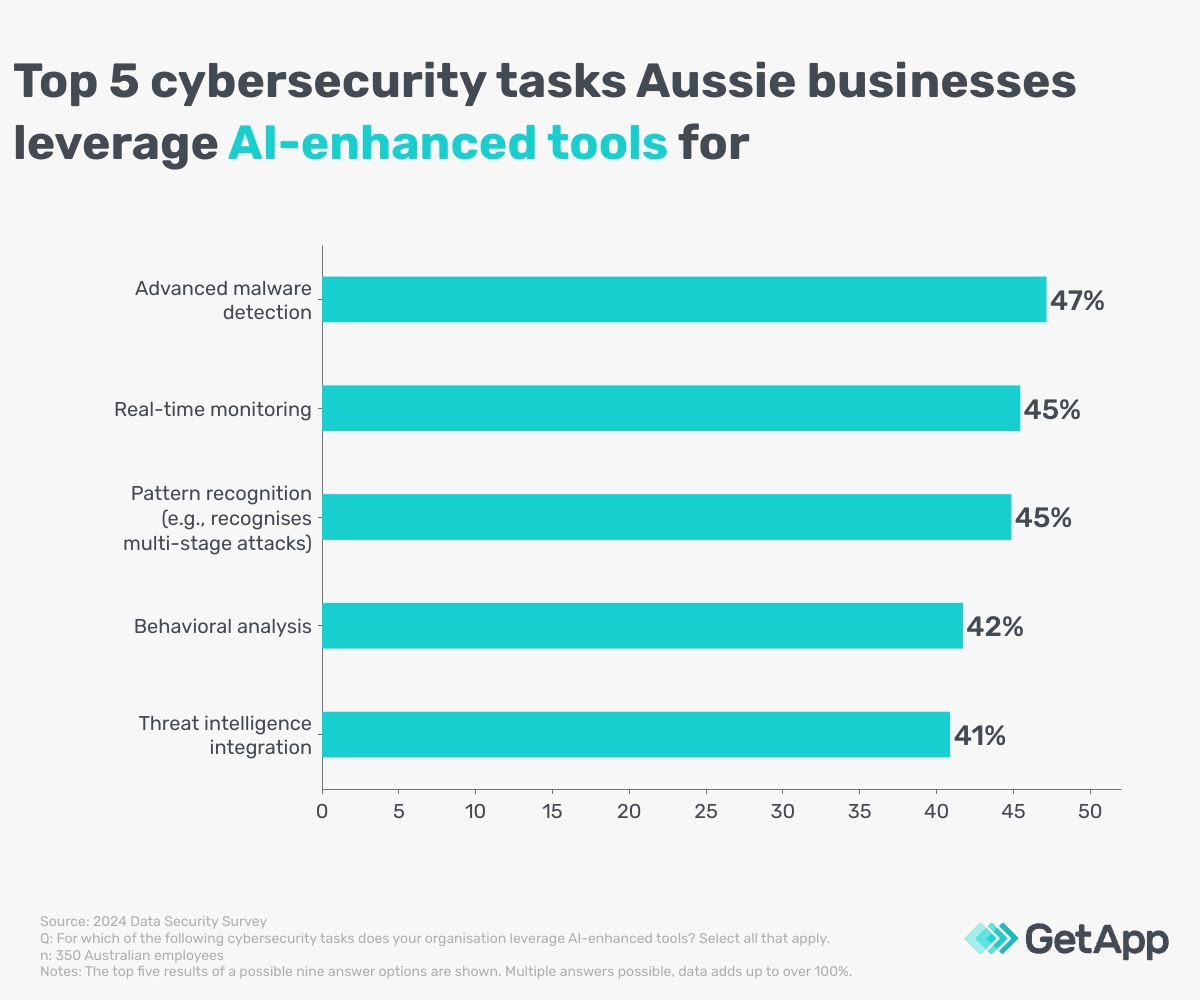

- 47% of Aussie respondents use AI tools for advanced malware detection.

Most Aussie IT professionals believe AI will help enhance defences, not attacks

AI has gained a poor reputation in cybersecurity, partly because it is misused to craft more convincing phishing emails, create deepfake impersonations of staff, and expose vulnerabilities faster. However, AI offers businesses far more than new risks.

Various facets of AI, such as machine learning [2], neural networks [3], natural language processing (NLP) [4], and deep learning [5], can present opportunities for cyber defence. They can also prove highly useful for automating tasks and helping systems identify threats more precisely.

This view of AI as more of an opportunity than a danger is clear among our survey participants. In Australia, 56% of respondents see greater potential for artificial intelligence to strengthen business cybersecurity defences than to create new vulnerabilities or enhance attacks.

Australian respondents are slightly less optimistic than the global average (62%) about AI helping security defences, and more pessimistic than the global average (38%) about it helping cybercriminals enhance their attacks.

These results suggest that businesses want to embrace AI rather than see it only as a source of worse threats. It is also worth considering that fear of AI's malicious uses could itself be driving interest in more robust network monitoring and security automation, areas where AI provides many of the solutions.

IT security spending in Australia is higher than the global average and nearly all IT professionals are investing in AI

With the findings suggesting optimism about AI's potential in cybersecurity defence, it’s worth unpacking what inspires such confidence. As mentioned above, AI is multifaceted and can influence many areas of cyber defence, which shapes how companies choose systems and prioritise their security spending.

We looked at the areas companies aim to prioritise when implementing AI systems in cybersecurity. For investment, the top priorities identified by Australian respondents centre on monitoring essential areas such as networks (41%), cloud services (40%), and email. IT professionals also indicate that their companies see broader use of AI for threat detection as a necessity.

This interest is widespread: 98% of Australian respondents identified at least one AI investment priority, and only 2% chose none of the available options.

AI investment will also depend on overall cybersecurity spending. Year-on-year figures show that Australian cybersecurity spending is currently higher than the global average and is expected to stay above it into 2025, with 91% of Australian respondents expecting spending to increase next year.

This ongoing commitment to security spending is a worthwhile step towards getting ahead of some of the considerable threats reported by our respondents. That said, the push to raise and maintain spending is also likely driven by the desire to stay compliant with security requirements more generally, avoid falling behind competitors, and act on the investment priorities noted above.

96% of Aussie IT professionals are using AI-assisted cybersecurity tools

As cyberthreats become more advanced, IT professionals need continuous ways to detect threats and monitor systems. AI-assisted threat detection and security monitoring can reduce some of that workload. We’ve already seen that AI investment is focused primarily on tools that assist with security monitoring, and this emphasis on monitoring and detection appears to be reflected among those who have already adopted AI-powered security systems.

In total, 96% of Australian respondents are using AI-assisted cybersecurity tools in some capacity, with most using them for various forms of threat detection. Among Australian firms, advanced malware detection stood out as the top use (47%), with real-time monitoring also proving popular.

Plan, prioritise, and prepare for successful AI implementation in cybersecurity

While there are solid reasons to introduce AI into a cybersecurity plan in the near future, the process shouldn’t be rushed, even when time is pressing.

Integrating AI into a business’s cybersecurity defences can be a long process, and it’s important to factor this into planning.

A recent article by Gartner identifies four key areas of focus for getting a firm's IT ready to leverage AI: defining how AI tools will be used, assessing deployment requirements, making data ‘AI-ready’, and adopting AI principles [1]. To help achieve these steps, we’ve highlighted three tips below to prepare your firm for AI cybersecurity implementation.

1. Plan: Identify where and how AI can enhance IT security

The first step in any AI deployment is to set goals for how the technology will be used. Clear goals help organise preparations for implementation and allocate staff and resources more effectively.

It makes sense to prioritise areas where AI can better protect systems that need constant surveillance. As our data shows, this applies primarily to network security, cloud security, and threat detection.

Organisations should also check how AI adoption will affect their current tech stack. Depending on changing business needs and market trends, businesses must decide whether to adopt entirely new software or use untapped features of an existing system. In many cases, businesses can add AI capabilities to an existing security suite by enabling new features or moving to a higher tier of their current software.

2. Prioritise: Develop a human-in-the-loop (HITL) approach

Using machine learning and deep learning automation in cybersecurity isn’t as contentious as some other applications of AI, such as generative AI in marketing. However, while automated security monitoring can help IT teams save time and improve protection, human oversight is still needed to catch errors a machine could make or miss because of faulty programming or limited capabilities.

A human-in-the-loop approach can help keep operations running smoothly even when AI manages most tasks, and it is especially valuable during AI deployment and when applying ethical AI principles. People should always be able to override AI decisions and act on threat intelligence manually when needed. Businesses should also set clear guardrails to prevent improper data use and stay compliant with regulations.

To prepare for AI, firms will need to provide security training that helps staff use AI tools effectively. Training should cover where and how human intervention is needed, how to stay compliant when using data to train AI, and the technical skills needed to identify bugs when managing AI systems.

3. Prepare: Ensure data is AI-ready

Getting effective results from AI requires feeding the system quality data. This data needs to be organised and machine-readable so the AI system can carry out its tasks accurately and with fewer errors. There are a few key factors to focus on to make data AI-ready.

Data management and data governance are essential to AI adoption. Any data a system can access and use must be checked carefully and organised into an error-free, readable, and consistent format before an AI system can put it to effective use.

Once data is prepared, companies must decide which data sources the AI system will draw on. One option is a system fed primarily with information from public datasets. Companies can also opt to use their own in-house datasets exclusively. Alternatively, they can partially or entirely rely on proprietary datasets belonging to the software vendor providing the AI system. Managing data in-house can be more challenging and expensive, but it also provides a more bespoke service.

Protecting any data you share with the system is also essential. In theory, AI-assisted cybersecurity software should take care of much of this, but data can still be compromised. For example, data poisoning, in which attackers tamper with the data an AI model learns from, can make a secure system more vulnerable to attacks (a concern raised by 38% of Australian respondents).

Methodology

*GetApp’s 2024 Data Security Survey was conducted online in August 2024 among 4,000 respondents in Australia (n=350), Brazil (n=350), Canada (n=350), France (n=350), India (n=350), Italy (n=350), Japan (n=350), Mexico (n=350), Spain (n=350), the U.K. (n=350), and the U.S. (n=500) to learn more about data security practices at businesses around the world. Respondents were screened for full-time employment in an IT role with responsibility for, or full knowledge of, their company's data security measures.

Sources

- [1] Get AI Ready: Action plan for IT Leaders, Gartner

- [2] Machine Learning, Capterra

- [3] Neural Networks, Gartner

- [4] Natural Language Processing (NLP), Capterra

- [5] Deep Learning, Gartner